Unlocking The Power Of Remote IoT Batch Jobs On AWS

Hey there, tech-savvy friend! Let’s dive into the fascinating world of remote IoT batch jobs on AWS. Picture this: you’ve got thousands of IoT devices out there collecting data—tons of it! But how do you make sense of all that information without getting overwhelmed? That’s where remote IoT batch jobs come in. Whether you’re a developer tinkering with a side project or a business leader looking to optimize workflows, AWS offers incredible tools to process large-scale data efficiently. In this article, we’ll break down everything you need to know to get started with remote IoT batch jobs on AWS. By the end, you’ll have a solid plan to set up and manage these jobs like a pro.

As the Internet of Things (IoT) keeps growing, efficient data processing matters more than ever. IoT devices generate data every second, and businesses need robust systems to handle, analyze, and store all of it. Enter AWS. With scalable, pay-as-you-go services for remote batch processing, AWS is a strong partner for managing these massive data streams. Let’s explore how this works and how you can harness it for your projects.

In this guide, we’ll deep-dive into the concept of remote IoT batch jobs, show how to implement them on AWS, and walk through a real-world example to show just how transformative this technology can be. By the time you finish reading, you’ll have a clear roadmap to set up and manage remote batch jobs for IoT data. Let’s get started!


Table of Contents:

- What Are Remote IoT Batch Jobs?

- Why Choose AWS for Remote IoT Batch Jobs?

- How to Set Up Remote IoT Batch Jobs on AWS

- Top Tools and Technologies for Remote IoT Batch Processing

- Real-World Example: Smart Agriculture

- Tips to Optimize Remote IoT Batch Jobs

- Scaling Your IoT Batch Jobs

- Managing Costs and Monitoring Performance

- Security Best Practices for IoT Batch Jobs

- What’s Next for Remote IoT Batch Processing?

What Are Remote IoT Batch Jobs?

Alright, let’s get into the nitty-gritty. A remote IoT batch job is essentially the process of collecting, processing, and analyzing massive datasets generated by IoT devices—but here’s the kicker: it’s done in chunks or batches, not in real-time. Unlike real-time processing, which demands instant results, batch jobs give you the flexibility to handle tasks that don’t need immediate answers but require serious computational power. For example, think about analyzing months of sensor data from a smart factory to identify patterns or trends. That’s where remote IoT batch jobs shine!

Key Features of Remote IoT Batch Jobs

Let’s break down what makes remote IoT batch jobs so powerful:

- Scalability: Need more processing power? No problem! You can easily scale up or down depending on the size of your dataset.

- Cost-Effectiveness: Why pay for resources you don’t need? Because batch jobs can tolerate waiting, you can run them on discounted capacity such as EC2 Spot Instances and scale compute down to zero between runs.

- Flexibility: AWS Batch runs your code as container images, so you can write batch jobs in almost any programming language or framework you like.

Remote IoT batch jobs are game-changers for industries like agriculture, healthcare, and manufacturing. Imagine analyzing vast amounts of data from farm sensors to improve crop yields, or reviewing patient data to enhance healthcare outcomes. These jobs allow you to extract actionable insights that can truly transform your business.

Why Choose AWS for Remote IoT Batch Jobs?

When it comes to implementing remote IoT batch jobs, AWS is a strong choice. Why? Because it pairs IoT-specific services such as AWS IoT Core with general-purpose storage, compute, and orchestration services that plug together into an end-to-end batch pipeline. Here’s why AWS fits batch processing needs so well:

1. Scalable Infrastructure

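AWS doesn’t just offer scalability; it excels at it. In AWS Batch, a managed compute environment automatically adds and removes EC2 instances between the minimum and maximum vCPU counts you set, based on the jobs waiting in the queue. Set the minimum to zero and the environment shrinks to no instances when the queue is empty, so your batch jobs run smoothly without over-provisioning resources. Efficiency? Check.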


2. Seamless IoT Integration

AWS IoT Core is a dream come true for developers. Its rules engine uses SQL-like statements to filter incoming device messages and route them to other AWS services such as AWS Lambda, Amazon S3, and Amazon Kinesis, making it a breeze to build end-to-end IoT solutions. From data collection to analysis, AWS has got you covered.

3. Cost-Effective Pricing

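Let’s talk about money. AWS operates on a pay-as-you-go model, meaning you only pay for the resources you actually use. AWS Batch itself adds no extra charge: you pay for the underlying resources, such as the EC2 instances or Fargate capacity your jobs run on. For batch jobs that aren’t continuous, this can lead to significant cost savings. Who doesn’t love saving money while getting top-notch performance?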

How to Set Up Remote IoT Batch Jobs on AWS

Setting up a remote IoT batch job on AWS might sound intimidating, but trust me, it’s easier than you think. Follow these steps, and you’ll be up and running in no time:

Step 1: Configure AWS IoT Core

The first step is to configure AWS IoT Core to manage your IoT devices. This involves registering each device with an X.509 certificate, attaching IoT policies that control which MQTT topics it may publish to, and creating rules that route the incoming data to the right destinations. Think of it as laying the foundation for your IoT ecosystem.
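As a minimal sketch of the rule part of this step, the Python below (using the boto3 SDK) builds an IoT rule that copies every message published to `sensors/<device_id>/telemetry` into S3, one object per message. The topic layout, bucket name, `raw/` prefix, rule name, and IAM role are illustrative assumptions; the role must allow IoT Core to call `s3:PutObject` on the bucket.

```python
import json


def build_s3_rule_payload(bucket: str, role_arn: str) -> dict:
    """Build a topicRulePayload that writes each telemetry message to S3."""
    return {
        # IoT SQL: keep the whole message and add the device ID taken
        # from the second topic level (sensors/<device_id>/telemetry).
        "sql": "SELECT *, topic(2) AS device_id FROM 'sensors/+/telemetry'",
        "awsIotSqlVersion": "2016-03-23",
        "ruleDisabled": False,
        "actions": [
            {
                "s3": {
                    "roleArn": role_arn,  # role IoT Core assumes to call s3:PutObject
                    "bucketName": bucket,
                    # Substitution templates are evaluated per message; newuuid()
                    # keeps keys unique when two messages share a timestamp.
                    "key": "raw/${topic(2)}/${timestamp()}-${newuuid()}.json",
                }
            }
        ],
    }


def create_rule(bucket: str, role_arn: str, rule_name: str = "sensor_telemetry_to_s3") -> None:
    # Imported here so the payload builder can be used without boto3 installed.
    import boto3

    iot = boto3.client("iot")
    iot.create_topic_rule(
        ruleName=rule_name,
        topicRulePayload=build_s3_rule_payload(bucket, role_arn),
    )


if __name__ == "__main__":
    # Print the payload for inspection; no AWS call is made.
    print(json.dumps(build_s3_rule_payload("my-iot-bucket", "arn:aws:iam::123456789012:role/iot-to-s3"), indent=2))
```

Calling `create_rule("my-iot-bucket", "arn:aws:iam::123456789012:role/iot-to-s3")` would register the rule, after which objects land under a per-device prefix in the bucket.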

Step 2: Store IoT Data in Amazon S3

Once your data is flowing, it’s time to store it somewhere durable and accessible. Amazon S3 is a natural fit: an IoT Core rule can write incoming messages straight into a bucket, and the same bucket then serves as the input for your batch jobs. It’s like a digital warehouse for all your IoT data.
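When a batch job starts, it needs to know which objects to read. The sketch below assumes a Hive-style layout of `raw/dt=YYYY-MM-DD/...` (a hypothetical convention, not something S3 enforces, though AWS Glue crawlers and Amazon Athena recognize it as partitions) and lists every key for a date range. It uses a paginator because `list_objects_v2` returns at most 1,000 keys per call.

```python
from datetime import date, timedelta


def daily_prefixes(start: date, end: date, root: str = "raw") -> list[str]:
    """One S3 prefix per day in [start, end], assuming <root>/dt=YYYY-MM-DD/ keys."""
    num_days = (end - start).days + 1
    return [f"{root}/dt={(start + timedelta(days=i)).isoformat()}/" for i in range(num_days)]


def list_input_keys(bucket: str, start: date, end: date) -> list[str]:
    """List every object key under the daily prefixes for the date range."""
    import boto3  # imported lazily so daily_prefixes works without the SDK

    s3 = boto3.client("s3")
    paginator = s3.get_paginator("list_objects_v2")
    keys: list[str] = []
    for prefix in daily_prefixes(start, end):
        # Each page holds at most 1,000 keys; the paginator follows continuation tokens.
        for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
            keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys
```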

Step 3: Set Up AWS Batch

Now comes the fun part: processing the data. AWS Batch runs containerized batch workloads for you. You define a compute environment (the EC2 or Fargate capacity), a job queue, and a job definition (the container image, its vCPU and memory requirements, and an IAM role), then submit jobs that read their input from Amazon S3. Voilà! Your batch job is ready to roll.
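Submitting a job is then a single API call. This sketch assumes a job queue and a job definition already exist, and that your container reads a hypothetical `INPUT_PREFIX` environment variable to find its input; the names, bucket, and retry count are placeholders.

```python
def build_submit_job_args(day: str, bucket: str, job_queue: str, job_definition: str) -> dict:
    """Arguments for batch.submit_job that process one day of data."""
    return {
        "jobName": f"soil-report-{day}",  # letters, digits, hyphens, underscores only
        "jobQueue": job_queue,
        "jobDefinition": job_definition,  # name, name:revision, or ARN
        "containerOverrides": {
            # Hypothetical variable the container reads to locate its input.
            "environment": [{"name": "INPUT_PREFIX", "value": f"s3://{bucket}/raw/dt={day}/"}],
        },
        "retryStrategy": {"attempts": 2},
    }


def submit_daily_job(day: str, bucket: str, job_queue: str, job_definition: str) -> str:
    import boto3  # imported lazily so the argument builder works without the SDK

    batch = boto3.client("batch")
    response = batch.submit_job(**build_submit_job_args(day, bucket, job_queue, job_definition))
    return response["jobId"]
```

`submit_daily_job("2024-06-01", "my-iot-bucket", "iot-batch-queue", "soil-report:1")` would return the job ID, which you can pass to the Batch `describe_jobs` API to track progress.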

Top Tools and Technologies for Remote IoT Batch Processing

There’s no shortage of tools and technologies that can enhance the efficiency of your remote IoT batch processing on AWS. Here are a few standouts:

1. AWS Lambda

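AWS Lambda is a serverless compute service that lets you run code without provisioning or managing servers. Use Lambda functions for lightweight preprocessing, such as validating or filtering IoT data as it lands in S3, before AWS Batch takes over the heavy lifting. Because a single Lambda invocation can run for at most 15 minutes, leave long-running analysis to Batch. It’s like having a personal assistant for your data.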
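Here is a minimal sketch of such a preprocessing function, assuming an S3 event notification on the `raw/` prefix triggers it and that each object holds newline-delimited JSON readings with hypothetical `device_id`, `ts`, and `moisture` fields. It drops malformed or out-of-range readings and writes the cleaned copy under `clean/`, where a Batch job can pick it up.

```python
import json
import urllib.parse

REQUIRED_FIELDS = ("device_id", "ts", "moisture")  # hypothetical reading schema


def clean_records(lines):
    """Keep JSON lines that parse, have every required field, and a moisture value in [0, 100]."""
    kept = []
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip unparseable lines
        if not isinstance(record, dict) or not all(f in record for f in REQUIRED_FIELDS):
            continue
        moisture = record["moisture"]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(moisture, (int, float)) and not isinstance(moisture, bool) and 0 <= moisture <= 100:
            kept.append(record)
    return kept


def handler(event, context):
    import boto3  # available in the Lambda Python runtime

    s3 = boto3.client("s3")
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Keys in S3 event notifications are URL-encoded.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
        cleaned = clean_records(body.splitlines())
        out_key = "clean/" + key.removeprefix("raw/")
        s3.put_object(
            Bucket=bucket,
            Key=out_key,
            Body="\n".join(json.dumps(r) for r in cleaned).encode("utf-8"),
        )
```

Restricting the trigger to `raw/` matters: if the function’s own `clean/` writes also fired the trigger, it would invoke itself in a loop.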

2. Amazon Kinesis

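Amazon Kinesis is a family of real-time data streaming services. For IoT batch pipelines, Amazon Data Firehose (formerly Kinesis Data Firehose) is especially handy: it buffers incoming records and delivers them to Amazon S3 in larger objects once a buffer size or time interval is reached. If you’re dealing with high-speed data streams, Kinesis is your go-to tool.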
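To feed a Firehose delivery stream from your own code (for example, a gateway that aggregates readings), you can send records in groups with `put_record_batch`. This sketch assumes the stream already exists; it appends a newline to each record because Firehose concatenates records as-is, and it chunks the list to stay within the documented limit of 500 records per call (the per-call size limit is not enforced here).

```python
import json

MAX_RECORDS_PER_CALL = 500  # documented PutRecordBatch record-count limit


def to_firehose_batches(readings, max_records=MAX_RECORDS_PER_CALL):
    """Encode readings as newline-terminated JSON and split them into per-call chunks."""
    records = [{"Data": (json.dumps(r) + "\n").encode("utf-8")} for r in readings]
    return [records[i:i + max_records] for i in range(0, len(records), max_records)]


def send_readings(readings, stream_name):
    import boto3  # imported lazily so the batching logic works without the SDK

    firehose = boto3.client("firehose")
    for batch in to_firehose_batches(readings):
        response = firehose.put_record_batch(DeliveryStreamName=stream_name, Records=batch)
        # PutRecordBatch can partially fail; a production sender would retry
        # only the entries whose result carries an ErrorCode.
        if response["FailedPutCount"]:
            raise RuntimeError(f"{response['FailedPutCount']} records were rejected")
```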

3. AWS Glue

AWS Glue is a fully managed ETL (extract, transform, load) service that simplifies the process of preparing and loading data for batch processing. With AWS Glue, you can focus on analyzing your data instead of spending hours on data prep.
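Starting a Glue ETL job from code looks like the sketch below. Glue passes job parameters as strings whose names begin with `--`; the job name and the `input_path`/`output_path` parameter names are hypothetical, and your Glue script would read them back (for example with `getResolvedOptions` from the `awsglue.utils` module).

```python
def glue_job_arguments(input_path, output_path, extra=None):
    """Build Glue job parameters: string values, names prefixed with '--'."""
    args = {"--input_path": input_path, "--output_path": output_path}
    for name, value in (extra or {}).items():
        key = name if name.startswith("--") else f"--{name}"
        args[key] = str(value)  # Glue expects every argument value as a string
    return args


def start_glue_job(job_name, input_path, output_path, extra=None):
    import boto3  # imported lazily so the argument builder works without the SDK

    glue = boto3.client("glue")
    response = glue.start_job_run(
        JobName=job_name,
        Arguments=glue_job_arguments(input_path, output_path, extra),
    )
    return response["JobRunId"]
```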

Real-World Example: Smart Agriculture

Let’s bring this all together with a real-world example. In smart agriculture, remote IoT batch jobs can revolutionize the way farmers manage their fields. Imagine using soil moisture sensors to collect data over several months. That data is then processed in batches to determine the best irrigation patterns for optimal crop yield. Here’s how it works:

- Collect data from soil moisture sensors using AWS IoT Core.

- Store the data in Amazon S3 for batch processing.

- Use AWS Batch to analyze the data and generate reports on irrigation needs.

With this setup, farmers can make data-driven decisions to improve their harvests, save water, and reduce costs. It’s a win-win-win!
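The analysis step itself can be plain Python inside the Batch container. The sketch below averages soil moisture per field per day and flags the days that fall below a dryness threshold. The record fields and the 30% threshold are hypothetical; real irrigation thresholds depend on crop, soil type, and sensor calibration.

```python
from collections import defaultdict
from statistics import mean

DRY_THRESHOLD = 30.0  # hypothetical moisture %, tune per crop and soil


def irrigation_report(readings, threshold=DRY_THRESHOLD):
    """Return {field: [days whose average moisture is below threshold]}, days sorted.

    readings: iterable of dicts with 'field', 'day' (YYYY-MM-DD) and 'moisture'.
    Fields with no dry days are omitted.
    """
    grouped = defaultdict(list)
    for r in readings:
        grouped[(r["field"], r["day"])].append(r["moisture"])

    report = defaultdict(list)
    # Sorting by (field, day) keeps each field's list in date order.
    for (field, day), values in sorted(grouped.items()):
        if mean(values) < threshold:
            report[field].append(day)
    return dict(report)
```

In a Batch job, `readings` would come from the S3 objects for the chosen date range, and the resulting dictionary could be written back to S3 as the irrigation report.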

Tips to Optimize Remote IoT Batch Jobs

Optimizing your remote IoT batch jobs can make a world of difference in terms of performance and cost. Here are some tips to help you get the most out of your setup:

1. Use Spot Instances

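Spot Instances are a game-changer for batch jobs. They let you use spare EC2 capacity at steep discounts (up to 90% off On-Demand prices). The catch: EC2 can reclaim a Spot Instance with a two-minute warning, so Spot is best for jobs that don’t need an exact start time and can safely be retried if interrupted.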
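With AWS Batch, you opt into Spot capacity in the compute environment. The sketch below builds a managed Spot environment that scales down to zero vCPUs when idle, plus a retry strategy that retries a job when the status reason indicates its host EC2 instance went away and exits on any other failure. The `Host EC2*` pattern, subnet, security group, and instance role values are assumptions to check against your own job failures and account setup.

```python
def spot_compute_environment(name, subnets, security_group_ids, instance_role, max_vcpus=256):
    """Arguments for batch.create_compute_environment using Spot capacity."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # Batch launches and terminates instances for you
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT",
            # Prefer the Spot pools least likely to be interrupted.
            "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
            "minvCpus": 0,  # scale to zero when no jobs are queued
            "maxvCpus": max_vcpus,
            "instanceTypes": ["optimal"],
            "subnets": subnets,
            "securityGroupIds": security_group_ids,
            "instanceRole": instance_role,  # ECS instance profile name or ARN
        },
    }


# Retry strategy for submit_job or a job definition: retry when the host
# instance went away (e.g. a Spot reclaim), exit on anything else.
SPOT_RETRY_STRATEGY = {
    "attempts": 3,
    "evaluateOnExit": [
        {"onStatusReason": "Host EC2*", "action": "RETRY"},
        {"onReason": "*", "action": "EXIT"},
    ],
}


def create_spot_environment(**kwargs):
    import boto3  # imported lazily so the builders work without the SDK

    return boto3.client("batch").create_compute_environment(**spot_compute_environment(**kwargs))
```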

2. Leverage AWS Step Functions

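AWS Step Functions is your secret weapon for orchestrating complex workflows. A state machine chains the steps of your pipeline (for example, a Glue ETL job followed by an AWS Batch job), waits for each to finish, and retries or catches failures according to rules you define, so a single hiccup doesn’t force you to rerun everything by hand.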
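As an illustration, here is a state machine definition in Amazon States Language, built as a Python dict, that runs a Glue job and then a Batch job. The `.sync` resource ARNs tell Step Functions to wait for each job to finish before moving on. The Glue job name, queue, job definition, and retry settings are assumptions.

```python
import json


def etl_then_batch_definition(glue_job: str, job_queue: str, job_definition: str) -> dict:
    """Amazon States Language: run a Glue job, then a Batch job, waiting for each."""
    retry = [{
        "ErrorEquals": ["States.ALL"],
        "IntervalSeconds": 60,
        "MaxAttempts": 2,
        "BackoffRate": 2.0,
    }]
    return {
        "Comment": "Prepare IoT data with Glue, then analyze it with AWS Batch",
        "StartAt": "PrepareData",
        "States": {
            "PrepareData": {
                "Type": "Task",
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job},
                "Retry": retry,
                "Next": "AnalyzeData",
            },
            "AnalyzeData": {
                "Type": "Task",
                "Resource": "arn:aws:states:::batch:submitJob.sync",
                "Parameters": {
                    "JobName": "iot-analysis",
                    "JobQueue": job_queue,
                    "JobDefinition": job_definition,
                },
                "Retry": retry,
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(etl_then_batch_definition("prepare-iot-data", "iot-batch-queue", "soil-report:1"), indent=2))
```

You would pass `json.dumps(...)` of the result as the `definition` argument of the Step Functions `create_state_machine` API, together with a role that is allowed to start and monitor the Glue and Batch jobs.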

3. Monitor Performance with CloudWatch

Amazon CloudWatch is your go-to tool for monitoring and logging. By default, AWS Batch sends job container logs to CloudWatch Logs (the `/aws/batch/job` log group), and your jobs can publish custom metrics so you can track their performance and spot bottlenecks. Knowledge is power, and CloudWatch gives you the insights you need to keep things running smoothly.
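Beyond the built-in metrics, a job can publish its own numbers. The sketch below sends two custom metrics with `put_metric_data`; the `IoTBatch` namespace and the metric names are hypothetical, and you would call `publish_job_metrics` at the end of a job run.

```python
def job_metrics(job_name: str, records_processed: int, duration_seconds: float) -> list[dict]:
    """MetricData entries for put_metric_data, tagged with the job name."""
    dimensions = [{"Name": "JobName", "Value": job_name}]
    return [
        {"MetricName": "RecordsProcessed", "Dimensions": dimensions,
         "Value": float(records_processed), "Unit": "Count"},
        {"MetricName": "JobDurationSeconds", "Dimensions": dimensions,
         "Value": float(duration_seconds), "Unit": "Seconds"},
    ]


def publish_job_metrics(job_name, records_processed, duration_seconds, namespace="IoTBatch"):
    import boto3  # imported lazily so job_metrics works without the SDK

    boto3.client("cloudwatch").put_metric_data(
        Namespace=namespace,
        MetricData=job_metrics(job_name, records_processed, duration_seconds),
    )
```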

Scaling Your IoT Batch Jobs

As your IoT deployment grows, so does the volume of data you need to process. Here’s how to handle it:

1. Enable Auto Scaling

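Auto scaling is your best friend when it comes to handling fluctuating workloads. With AWS Batch managed compute environments, scaling is built in: Batch launches instances as jobs queue up and terminates them when they finish, up to the maximum vCPU count (`maxvCpus`) you set. Size that ceiling carefully so your system runs like a well-oiled machine without runaway costs.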
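To pick that ceiling, a back-of-the-envelope estimate helps: total vCPU-seconds of work divided by your deadline. The sketch assumes equally sized, independent jobs that pack perfectly onto instances, so treat the result as a lower bound rather than a guarantee.

```python
import math


def required_max_vcpus(num_jobs: int, seconds_per_job: float, vcpus_per_job: int,
                       deadline_seconds: float) -> int:
    """Lower-bound vCPU ceiling to finish all jobs before the deadline.

    Assumes independent, equally sized jobs that pack perfectly onto instances.
    """
    if seconds_per_job > deadline_seconds:
        raise ValueError("a single job already takes longer than the deadline")
    total_vcpu_seconds = num_jobs * seconds_per_job * vcpus_per_job
    # At least one job's worth of vCPUs is needed to run anything at all.
    return max(vcpus_per_job, math.ceil(total_vcpu_seconds / deadline_seconds))
```

For example, 10,000 files that each take 30 seconds on one vCPU need 300,000 vCPU-seconds; finishing within an hour requires at least 300,000 / 3,600 ≈ 83.3, rounded up to 84 vCPUs.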

2. Implement Data Partitioning

Partitioning your data is another great way to improve processing speed. Split the dataset into independent chunks (for example, by day or by device) and process each chunk in a separate job, so multiple instances can crunch through large datasets in parallel.
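One way to do this on AWS Batch is an array job: you submit a single job with `arrayProperties={"size": N}`, Batch starts N child jobs, and each child reads its position from the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The sketch below assigns each child a disjoint slice of the input keys. It passes the array size through a hypothetical `ARRAY_SIZE` variable that you would set yourself in the container overrides.

```python
import os


def shard(keys: list[str], index: int, size: int) -> list[str]:
    """Keys for array child `index` out of `size`: a strided, disjoint split."""
    if not 0 <= index < size:
        raise ValueError("index must satisfy 0 <= index < size")
    # Sorting makes the split deterministic across children.
    return sorted(keys)[index::size]


def keys_for_this_child(all_keys: list[str]) -> list[str]:
    # Set by AWS Batch for each child of an array job.
    index = int(os.environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    # Hypothetical variable you pass yourself via containerOverrides.
    size = int(os.environ.get("ARRAY_SIZE", "1"))
    return shard(all_keys, index, size)
```

Sorting keeps the split deterministic, but every child must see the same key list; writing the list to a manifest object before submitting is safer than having each child list the bucket while new data is still arriving.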

Managing Costs and Monitoring Performance

Managing costs is crucial when implementing remote IoT batch jobs on AWS. Here’s how to keep things under control:

1. Use Cost Explorer

AWS Cost Explorer is your financial dashboard. It provides insights into your spending patterns, helping you identify areas where you can save money. It’s like having a personal CFO for your cloud resources.
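Cost Explorer also has an API. The sketch below asks for daily unblended cost grouped by service and totals it per service. Dates are `YYYY-MM-DD` strings with an exclusive end date; pagination via `NextPageToken` is omitted for brevity, and Cost Explorer API requests are themselves billed per request.

```python
from collections import defaultdict


def cost_by_service(response: dict) -> dict[str, float]:
    """Sum UnblendedCost per service across all periods, largest first."""
    totals: dict[str, float] = defaultdict(float)
    for period in response["ResultsByTime"]:
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            # Amounts are returned as decimal strings.
            totals[service] += float(group["Metrics"]["UnblendedCost"]["Amount"])
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


def fetch_cost_by_service(start: str, end: str) -> dict[str, float]:
    import boto3  # imported lazily so cost_by_service works without the SDK

    ce = boto3.client("ce")
    response = ce.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},  # End date is exclusive
        Granularity="DAILY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )
    return cost_by_service(response)
```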

2. Set Budget Alarms

Use AWS Budgets to create cost alerts and stay on top of your spending. These alerts notify you by email or Amazon SNS when your actual or forecasted spending crosses a threshold you set, giving you the opportunity to make adjustments before it’s too late.
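With AWS Budgets, such an alert can be created in code. This sketch defines a monthly cost budget with email alerts at a percentage of the limit for both actual and forecasted spend; the budget name, amount, threshold, account ID, and address are placeholders.

```python
def monthly_budget(name: str, limit_usd: float, email: str, threshold_pct: float = 80.0):
    """Return (Budget, NotificationsWithSubscribers) for budgets.create_budget."""
    budget = {
        "BudgetName": name,
        "BudgetLimit": {"Amount": f"{limit_usd:.2f}", "Unit": "USD"},
        "TimeUnit": "MONTHLY",
        "BudgetType": "COST",
    }
    notifications = [
        {
            "Notification": {
                "NotificationType": kind,  # ACTUAL spend or FORECASTED spend
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": threshold_pct,
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
        }
        for kind in ("ACTUAL", "FORECASTED")
    ]
    return budget, notifications


def create_monthly_budget(account_id: str, name: str, limit_usd: float, email: str) -> None:
    import boto3  # imported lazily so monthly_budget works without the SDK

    budget, notifications = monthly_budget(name, limit_usd, email)
    boto3.client("budgets").create_budget(
        AccountId=account_id,
        Budget=budget,
        NotificationsWithSubscribers=notifications,
    )
```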

Security Best Practices for IoT Batch Jobs

Security is non-negotiable when it comes to IoT data. Here are some best practices to keep your data safe:

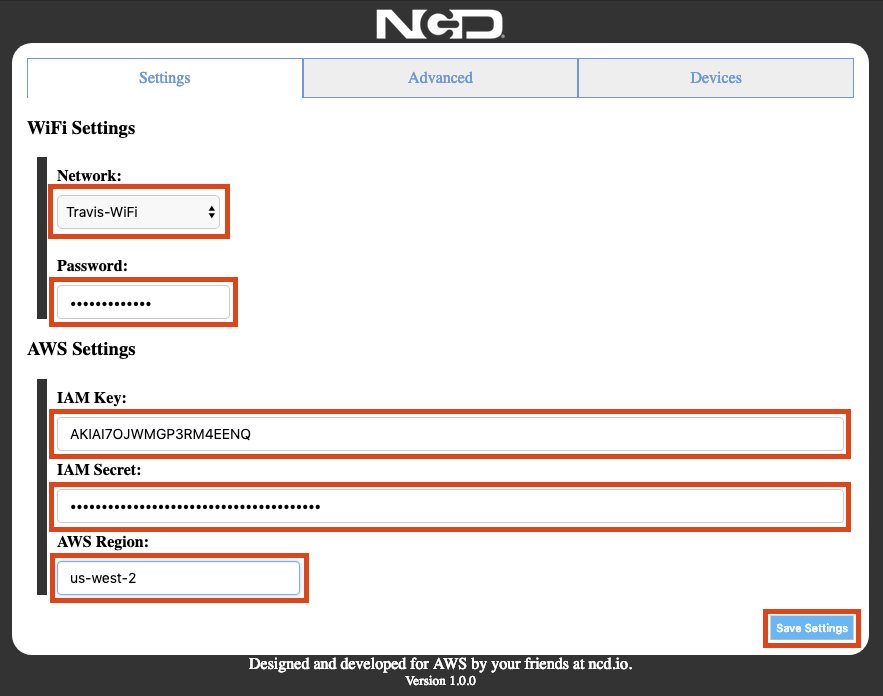

1. Use IAM Roles and Policies

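Implement fine-grained, least-privilege access control using IAM roles and policies. Give each Lambda function and Batch job its own role with only the permissions it needs (devices are governed separately by AWS IoT Core policies), so that only authorized users and services can access your data.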
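In practice, least privilege means scoping policies to the exact resources a role needs. The sketch below builds an IAM policy that lets a Batch job role list and read objects under a single S3 prefix and nothing else; the bucket, prefix, role, and policy names are placeholders.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """IAM policy allowing List/Get on one S3 prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOnlyThePrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # ListBucket applies to the bucket, so restrict it by prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                "Sid": "ReadObjectsUnderThePrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


def attach_to_role(role_name: str, bucket: str, prefix: str) -> None:
    import boto3  # imported lazily so the policy builder works without the SDK

    boto3.client("iam").put_role_policy(
        RoleName=role_name,
        PolicyName="read-iot-input",
        PolicyDocument=json.dumps(read_only_prefix_policy(bucket, prefix)),
    )
```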

2. Encrypt Data in Transit and at Rest

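Encryption is a critical layer of defense. Devices connect to AWS IoT Core over TLS, and Amazon S3 encrypts new objects at rest by default; you can switch to AWS KMS keys (SSE-KMS) for tighter control over who can decrypt the data. Protecting data both in transit and at rest also helps you comply with data protection regulations. Better safe than sorry, right?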
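For S3, two common settings cover both halves: a bucket policy that denies any request not made over TLS, and default encryption with a KMS key. The sketch builds both documents; the bucket name and key ARN are placeholders. Note that `put_bucket_policy` replaces the bucket’s entire existing policy, so merge this statement into any policy you already have.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that rejects any request not made over TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


def kms_default_encryption(kms_key_arn: str) -> dict:
    """Default-encryption config that uses SSE-KMS with an S3 Bucket Key."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # Bucket Keys reduce the number of requests S3 makes to KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


def apply_bucket_protections(bucket: str, kms_key_arn: str) -> None:
    import boto3  # imported lazily so the builders work without the SDK

    s3 = boto3.client("s3")
    s3.put_bucket_policy(Bucket=bucket, Policy=json.dumps(deny_insecure_transport_policy(bucket)))
    s3.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=kms_default_encryption(kms_key_arn),
    )
```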

What’s Next for Remote IoT Batch Processing?

The future of remote IoT batch processing is bright, with exciting trends on the horizon:

1. Edge Computing

Edge computing is all about processing data closer to the source. This reduces latency and bandwidth usage, making it ideal for time-sensitive applications.

2. AI and Machine Learning

AI and machine learning are set to play a major role in optimizing IoT batch jobs. From predictive analytics to automation, these technologies will help you make smarter decisions faster.

3. Quantum Computing

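Quantum computing could eventually speed up certain classes of problems, such as some optimization and simulation tasks, that are expensive for classical batch processing. It’s still in its infancy and far from a general-purpose replacement for today’s compute, but it’s definitely a trend to watch.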

Conclusion

Remote IoT batch jobs on AWS are a powerful solution for processing large-scale IoT data. By leveraging AWS services and following best practices, you can efficiently manage and analyze your IoT data to uncover valuable insights. Remember to optimize your setup, consider scalability, and prioritize security to ensure the success of your IoT projects. So what are you waiting for? Get out there and start transforming your IoT workflows!

We’d love to hear your thoughts and experiences in the comments below. If you found this article helpful, be sure to share it with your network. And for more insights on IoT and AWS, check out our other articles. Happy tech-ing, friend!
